Prevent This: Voice Phishing Attacks

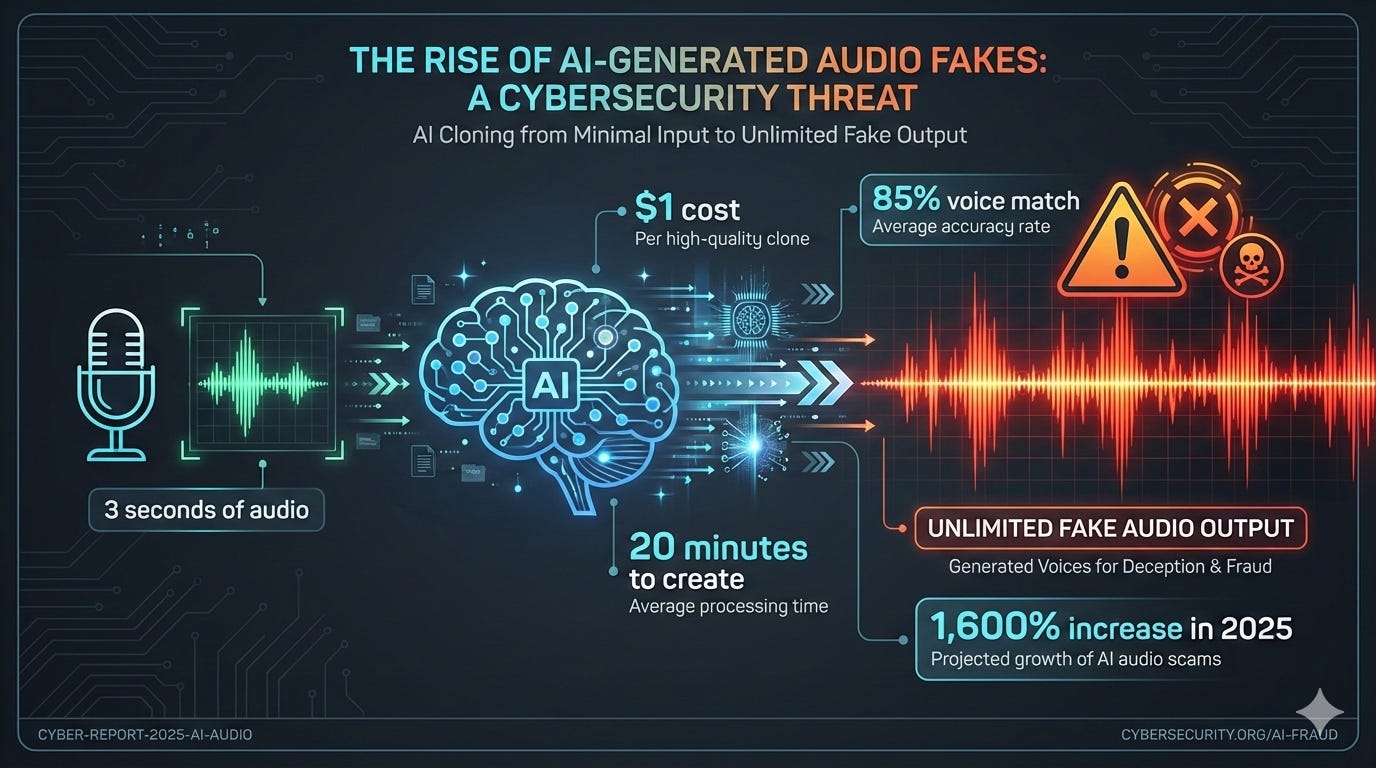

Three seconds of audio is all it takes to clone a voice with 85% accuracy. Take a few simple steps to prevent these increasingly popular attacks.

What Happened?

In February 2025, fashion designer Giorgio Armani received a call from Italian Defense Minister Guido Crosetto. The “minister” explained that kidnapped journalists needed ransom money. Could Armani wire €1 million to Hong Kong? The Bank of Italy would reimburse him.

Armani wasn’t alone. Prada’s Patrizio Bertelli, former Inter Milan owner Massimo Moratti, and members of the Beretta family all received identical calls. Attackers had cloned Crosetto’s voice from public speeches, and the calls appeared to originate from defense ministry phone numbers.

Moratti wired €1 million before realizing it was a scam. Police recovered the funds from a Dutch account, but not before the story made international headlines.

The attacks accelerated throughout 2025:

A European energy company lost $25 million after attackers cloned the CFO’s voice for live wire transfer instructions

Threat group UNC6040 used a cloned CFO voice to steal $12 million from a Canadian insurance company

BlackBasta ransomware operators used deepfake calls to authorize malware deployment at a UK logistics firm

The Com syndicate breached Australian banks by spoofing vendor payment approvals

One large retailer now receives over 1,000 AI-generated scam calls daily, according to Pindrop.

Why Should You Care?

Modern AI clones voices from just three seconds of audio. A podcast clip, conference talk, or voicemail greeting is enough. The Biden robocall during the 2024 New Hampshire primary cost $1 and took 20 minutes to create.

The 2025 numbers:

Deepfake vishing up 1,600% in Q1 2025

30% of corporate impersonation attacks now use AI deepfakes

10%+ of banks have suffered deepfake losses over $1 million (average: $600K per incident)

1 in 4 adults have experienced an AI voice scam

Projected $40 billion in deepfake fraud losses by 2027

Deepfake-as-a-Service platforms made this accessible to anyone in 2025. No technical expertise required.

Families are targets too. In July 2025, Sharon Brightwell of Dover, Florida received a call from her “daughter” sobbing about a car accident. She wired $15,000 for “legal fees” before discovering her actual daughter was safe at home. The voice had been cloned from social media.

How Does This Work?

Voice cloning analyzes audio to learn the patterns that make a voice unique: pitch, cadence, accent, breathing. Once trained, the AI generates speech that sounds identical to the target saying whatever the attacker types.

The attacks follow predictable patterns involving urgency, secrecy, and unusual payment methods.

Corporate attacks: A cloned “executive” contacts finance with an urgent request. Deal closing, vendor payment, something sensitive. Attackers research org charts and relationships to make requests sound legitimate.

Family attacks: Someone calls sounding like your child or grandparent. They’re in trouble and need money immediately via wire transfer, gift cards, or crypto. They ask you not to tell anyone, claiming they’re embarrassed or being threatened.

The psychology is simple: when we hear a familiar voice in distress, fear overrides critical thinking.
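The three warning signs named above (urgency, secrecy, and unusual payment methods) can be written down as a simple checklist. The Python sketch below is illustrative only. The keyword lists and the `red_flags` function are hypothetical, and naive phrase matching will miss plenty of real scams. Treat it as a memory aid, not a detector.

```python
# Hypothetical phrase lists, chosen only to illustrate the three patterns.
RED_FLAGS = {
    "urgency": ("urgent", "immediately", "right now"),
    "secrecy": ("don't tell", "do not tell", "keep this between us"),
    "unusual_payment": ("wire", "gift card", "crypto", "bitcoin"),
}


def red_flags(call_notes: str) -> list[str]:
    """Return the red-flag categories whose phrases appear in the notes."""
    # Lowercase and normalize curly apostrophes so "Don’t" matches "don't".
    text = call_notes.lower().replace("’", "'")
    return [
        name
        for name, phrases in RED_FLAGS.items()
        if any(phrase in text for phrase in phrases)
    ]


if __name__ == "__main__":
    notes = "I need you to wire the money immediately, and don't tell Mom"
    print(red_flags(notes))
```

Any single flag is a reason to slow down. All three together are a strong signal to hang up and verify through a separate channel.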

What Can You Do?

For Organizations:

Stop treating voice or video as verification for financial transactions. If cloning costs $1, voice is a vulnerability, not a control.

Require multi-person authorization for wire transfers. No single employee should control financial outflows end-to-end.

Establish callback procedures using known numbers, not caller-provided ones. If your “CFO” requests an urgent transfer, hang up and call back on a verified number.

Run actual drills, not just awareness training. Employees need to practice responding when someone who sounds exactly like their boss pressures them to act.
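The callback and multi-person rules above can be expressed as a release check that ignores how convincing the caller sounded. The following Python sketch is one possible shape for such a policy, not a reference implementation. `VERIFIED_NUMBERS`, `TransferRequest`, `may_release`, and the phone numbers are all hypothetical names and values invented for illustration.

```python
from dataclasses import dataclass, field
from typing import Optional

# Hypothetical directory of callback numbers, maintained independently
# of any incoming call (for example, from HR records).
VERIFIED_NUMBERS = {"cfo": "+1-555-0100"}


@dataclass
class TransferRequest:
    requester_role: str
    amount: float
    # The number staff actually dialed to confirm the request, if any.
    callback_number_used: Optional[str] = None
    # A set, so the same person cannot be counted twice.
    approvers: set = field(default_factory=set)


def may_release(req: TransferRequest, required_approvers: int = 2) -> bool:
    """Allow release only after a callback on a known number AND
    sign-off from enough distinct approvers."""
    known = VERIFIED_NUMBERS.get(req.requester_role)
    callback_ok = known is not None and req.callback_number_used == known
    enough_approvers = len(req.approvers) >= required_approvers
    return callback_ok and enough_approvers


if __name__ == "__main__":
    # The "CFO" supplied their own callback number: this must be rejected.
    spoofed = TransferRequest("cfo", 250_000.0, "+1-555-0199", {"alice", "bob"})
    print(may_release(spoofed))
```

Note that the check has no input for "the voice sounded right". That omission is the point: the decision rests only on the verified callback and the approver count.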

For Families:

Create a family safe word. Something random that isn’t on social media, shared only in person. “Purple dinosaur pancakes” works. Your pet’s name doesn’t.

Critical: never give up the safe word when asked. Attackers will say “I’m scared and forgot our password, remind me?” If they ask for it instead of providing it, hang up.

When in doubt, hang up and call back using the number you have saved. Caller ID can be spoofed.

The Bottom Line

Voice and video are no longer proof of identity. Gartner predicts that by 2026, 30% of enterprises will no longer consider identity verification and authentication solutions reliable in isolation because of AI-generated deepfakes.

A family safe word costs nothing and takes two minutes. It could be the difference between losing your savings and hanging up on a scammer.

Next time someone calls sounding like your boss or your child with an urgent money request, remember: your ears are lying. Verify through a separate channel first.

Research Sources: Cyble Executive Threat Monitoring 2025, Right-Hand Cybersecurity Vishing Report, Euronews/Bloomberg Italy Coverage, McAfee 2025 Reports, FBI IC3, Keepnet Labs, Pindrop, Group-IB

Last Updated: January 5, 2026